My first reaction was "Too many pages" and "Too much nannyism". PseudoContext is festering all over the place. Bloat is a problem, too. The textbook industry has jumped the shark.

HMH sent out invitations to view their new Larson books for Algebra 1, Algebra 2 and Geometry, and it immediately strikes me: Larson, really? Did Larson write any of those textbooks? I doubt he even opened a single chapter document, never mind wrote one, but I digress ...

Let's take a look at this new and shiny, Common Core Standards-compliant Algebra II textbook, which is so extensively resourced as to be exhaustive ... a curriculum ad nauseam. The images show the incredible list of stuff that comes with this thing.

Bloat: 1220 pages - really? Even if you exclude the 300 pages of appendices, answers, introductory material and glossary, you still have more than 900 pages of content. Spread over a roughly 180-day school year, that's about 5 pages a day, six if you don't count all those exam days and assemblies and such. Seems excessive.

I like having the Pre-AP and Remediation guide because my Guidance Department can't seem to schedule students intelligently and everyone is under the impression that dumping every level of ability into the same room is appropriate.

The Spanish Assessment Book seems wrong for several reasons. First, Spanish is a common second language, but it's by no means the only one. Second, the rest of the materials are in English, so having a test in Spanish doesn't seem necessary.

It seems that any teacher dealing with these kids on a day-to-day basis would be able to handle that in English. If you're able to teach the material, then you're able to create a test that the kids can understand. Do we really need to translate "Factor" when none of the big assessments (Regents, SAT, ACT, GRE, GED, AP, IB) will? I remember taking languages - the instructions were always in French (or Latin, or German, or Japanese - I took lots of language courses) - you just deal with it and then suddenly, it's no longer a problem.

The multi-language Visual Glossary looks really cool. I'd get one of those. The same glossary in ten common languages: English, Spanish, Chinese, Vietnamese, Cambodian, Laotian, Arabic, Haitian Creole, Russian, and Portuguese. Quick aside: I once had two students who couldn't speak English - a Chinese girl and a Japanese girl - they could write to each other but couldn't understand the other's spoken language. The Kanji for many things was identical, even though the words for the pictograms were different. Try it - it really brings together two groups who don't ordinarily get along and gives them a feeling of security in a sea of doubt.

384 pages for a note-taking guide? Couldn't they have simplified this a little? Anything longer than two pages is too much for a guide to taking notes. "Isn't this the antithesis of note-taking?" I asked myself, so I went and previewed it.

I ran into what I would term "educational nannyism", by which I mean materials that try to do too much, that attempt to write every letter, word, symbol and expression. The teacher would photocopy these pages for the students, who fill in a few blanks and then save them to their binders, completely eliminating the necessary mental steps of assimilation and consolidation that come from the note-taking process. It's very much like writing a lab report by filling in the blanks in a prewritten template.

The whole thing is done for the student except for a few blanks and an extraneous table at the end - it has no purpose in this problem, and there's no room for the three extra problems that would make the rows necessary. Weird.

I figured that I'd find PseudoContext, but I was surprised at how much of it there was and how easy it was to find. I randomly entered page numbers in the preview thing but never had to go looking very far - mostly I went to the word problems (the "problem-solving" section) of whatever topic I landed on and was able to find at least one whopper in every set.

Seems a bit more accurate than most mountain climbers could accomplish and I really don't like the casual use of the mountainside in the picture. It's too much of a visual to be ignored and it only causes confusion in the viewer: is this the line required? Most students are going to want to put the altitude on the y-axis as the picture shows; why did they label it this way? Then, the instructions are more detailed and specific than they need to be. A simple list of the data and a question about the temp at the top would have sufficed. Also, way too many data points - they're bashing you over the head with 4° per 500 ft and way too much regularity in the points - how about base of the mountain, edge of the meadow and treeline? BTW, how many mountains go from sea level to 14000' like that? That picture is maybe 6000 feet. Get a better image.
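For what it's worth, here is roughly the problem I'd rather see, sketched in Python: a few readings at irregular altitudes (base, meadow edge, treeline), a least-squares line through them, and an extrapolation to the summit. The function names are hypothetical, and the linear model assumes the 4° per 500 ft drop holds all the way up.

```python
def fit_line(points):
    """Ordinary least-squares fit of temp = slope * altitude + intercept.

    points: list of (altitude_ft, temp_deg) pairs (hypothetical field readings).
    """
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    # Sums of squared deviations and cross-deviations about the means.
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(slope, intercept, altitude):
    """Temperature the fitted line gives at the requested altitude."""
    return slope * altitude + intercept
```

With made-up readings of 60° at 6000 ft, 44° at 8000 ft and 20° at 11000 ft (which sit exactly on the 4° per 500 ft line), `fit_line` gives a slope of -0.008 degrees per foot, and `predict(slope, intercept, 14000)` comes out at about -4°. Three points, one question, no mountainside picture required.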

Wow, I hate this question. (1) A modern manufacturing plant can make a few more than 60 printers a day. (2) The profit is in the ink, not the printer. Some printers are sold below cost for just that reason. (3) The labor estimates are way off. (4) You don't just turn off a laser printer line and turn on the inkjet line at random.

Get some better numbers dammit. This math was developed to streamline manufacturing processes so don't shy away from reality.

Stop it. Give the regression equation if you must (but 9 data points would be cool, too), but then just ask the question. This is not an equation that is particularly good for synthetic division and factoring (see it on Wolfram Alpha). There are so many questions that could have been asked with this but weren't. Shame, really.

I just laughed at that. "The cooling rate of beef stew is r = 0.054" - How in the hell is anyone supposed to know that and why would anyone in RealLife™ approach it that way? Wouldn't three time-temp data points have been better, more realistic?
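Here's what three readings would buy you, assuming the problem intends Newton's law of cooling, T(t) = T_a + (T_0 - T_a)e^(-rt). With three temperatures taken at equal intervals h, the successive differences shrink by the same factor e^(-rh), so you can recover both the rate r and the room temperature T_a without anyone handing you r = 0.054. The function name is hypothetical, and the units (degrees and minutes) are an assumption; the book may use different ones.

```python
import math


def cooling_fit(step, temp0, temp1, temp2):
    """Estimate ambient temperature and cooling rate r for
    T(t) = ambient + (temp0 - ambient) * exp(-r * t),
    given readings at t = 0, step and 2 * step (hypothetical helper)."""
    if temp1 == temp0:
        raise ValueError("first two readings must differ")
    # Successive differences shrink by the same factor, exp(-r * step).
    ratio = (temp2 - temp1) / (temp1 - temp0)
    if not 0 < ratio < 1:
        raise ValueError("readings do not fit exponential cooling")
    rate = -math.log(ratio) / step
    # Solving the two ratio equations for the ambient temperature.
    ambient = (temp0 * temp2 - temp1 ** 2) / (temp0 + temp2 - 2 * temp1)
    return ambient, rate
```

In a quick check with readings generated from a 200° pot, a 70° kitchen and r = 0.054, taken 10 minutes apart, it recovers both the kitchen temperature and the rate. That's a problem a kid could actually do with a thermometer and a clock.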

This is so back-asswards. The height of the dock above the ground is related to its width? It's usually 48", but differences in truck bed height will change that - the RealWorld™ makes this problem stupid, and knowledge of the RealWorld™ gets in the way. The ramps are differently sloped - has the writer never actually seen a ramp before? This is a simple volume problem made unrecognizable to any RealWorld™ dweller. The only reason it's here is to somehow bring polynomials into a problem that doesn't actually need them.

Finally. A RealWorld™ problem. If only they had done three resistors in parallel ...
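The formula in question, sketched in Python and assuming the book's problem uses the standard one: resistors in parallel combine as 1/R = 1/R_1 + 1/R_2 + ..., so a third resistor is just one more term in the sum. The function name is hypothetical.

```python
def parallel_resistance(*resistances):
    """Equivalent resistance of resistors wired in parallel: 1/R = sum(1/R_i)."""
    if not resistances or any(r <= 0 for r in resistances):
        raise ValueError("need one or more positive resistances")
    return 1 / sum(1 / r for r in resistances)
```

For example, `parallel_resistance(4, 4, 2)` returns 1.0 ohm. Solving for one unknown resistor when the total is given is where the rational equations come in.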

That's all I have on this book. It's still better than what I have to deal with, so I'd get it if the choice were mine. I'd replace the stupid questions and add better material from my website and various online resources.

Could work.

It's not as if no one ever noticed this before, but I really wish the History Channel would stop with the reality-show junk. I don't want them to go back to WWII all the time, but come on, there's no history on during Prime Time on History.

A comment on a Joanne Jacobs article:

And here I thought I was supposed to be teaching math.

I don’t believe VA is a sound basis for bonuses or terminations. “Seem to correlate” does not mean “cause” … and that’s for the best measurements.

What of all the poor ones? “Some of the early VAM methods were highly unstable” ("Unstable" is a charitable term for "Any resemblance to a consistent reality is neither implied nor intended.")

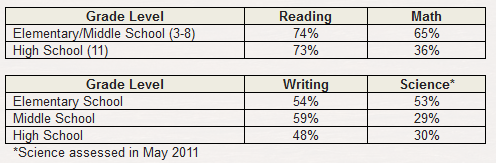

It means that the results cannot be trusted for grading the students who took them (that's stated plainly and explicitly in the administrator's notes), and it means that the tests are even worse at evaluating the teacher, who didn't take them.

There are many issues with any kind of testing. What exactly are we supposed to be teaching and what results do we want out of it? What will we consider to be a success? Do the tests measure what we think they're measuring and does that result resemble the state of the student?

I am given a curriculum that I am to follow. The test is written for a different curriculum. Don't judge me based on something you tell me not to use.

This graphic to the right cleverly pretends that measuring a child's height is exactly analogous to measuring his grade level. Unfortunately, the accuracy possible in the one is not possible in the other. I would note with some amusement that the books he's standing on make even that height measurement into an exercise in systematic error.

States routinely tell the testing company to instruct the scorers that averages HAD to be in a certain range - any test scoring that ran counter to that pre-determined result was wrong. As Todd Farley describes it, accuracy is a fantasy.

It doesn't make sense to evaluate me based on a test given to a fifteen-year-old kid who has only had me for a short while, who has failed again and again, who has attendance "issues", who's strung out on something ("self-medicated"), using a test that pretends to accuracy but fails miserably at it and is rarely aligned to the same curriculum that I've been required to follow.

What about Value-Added?